The mere fact that information is being conveyed between a system and its environment, though, tells us nothing about its “meaning.”

Shannon’s formulation of information dealt with quantifying information and with the accuracy of its transmission, but it was entirely silent on how to assess whether information is actually significant to an entity: whether it is important, or of value, to some system in context.

But that is precisely the sort of “meaning” we are interested in here.

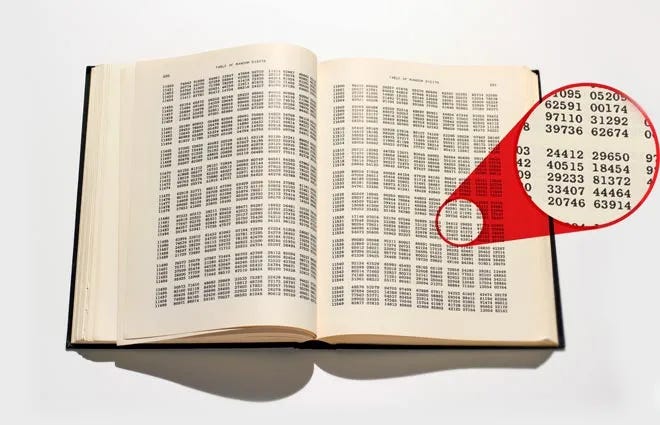

According to Shannon’s theory, I could send you an email containing billions of completely random numbers, and as long as you accurately received what I sent, you would have gained a great deal of “information.”

Clearly, this is a very impoverished notion of information. It ignores the crucial question of what information is about, what it might be for, and thus its “semantic” significance.
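To make the point concrete, here is a minimal Python sketch (an illustration added here, not anything from the original argument) that estimates Shannon entropy from the symbol frequencies in a message. Two assumptions should be stated up front: this is only a zeroth-order, frequency-based estimate, since Shannon’s measure is properly defined over a source’s probability distribution; and the function names `empirical_entropy` and `total_bits` are hypothetical. The sketch shows that random digits score close to the maximum of log2(10) ≈ 3.32 bits per digit, and that a meaningful sentence and a scrambled copy of its letters score the same, because the measure sees only how often symbols occur, never what they are about.

```python
import math
import random
from collections import Counter


def empirical_entropy(message: str) -> float:
    """Zeroth-order Shannon entropy, in bits per symbol.

    Assumption: probabilities are estimated from the symbol frequencies
    observed in `message` itself, ignoring order and context entirely.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    n = len(message)
    # H = -sum(p * log2(p)) over the observed symbols
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def total_bits(message: str) -> float:
    """Estimated information content of the whole message, in bits."""
    return empirical_entropy(message) * len(message)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible

    # A long string of uniformly random decimal digits: pure noise.
    noise = "".join(rng.choice("0123456789") for _ in range(100_000))

    # A meaningful sentence, and the same characters in scrambled order.
    sentence = "the cat sat on the mat because it was warm"
    chars = list(sentence)
    rng.shuffle(chars)
    scrambled = "".join(chars)

    print(f"random digits: {empirical_entropy(noise):.3f} bits/symbol "
          f"(maximum {math.log2(10):.3f}), {total_bits(noise):,.0f} bits total")
    print(f"sentence:      {empirical_entropy(sentence):.3f} bits/symbol")
    print(f"scrambled:     {empirical_entropy(scrambled):.3f} bits/symbol")
```

The noise string racks up hundreds of thousands of bits, while the sentence and its scrambled copy are indistinguishable by this measure; whatever makes the sentence meaningful is simply not something the calculation can register.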

Recently, however, complexity theorists have been able to fill in what this account leaves out. Specifically, they have identified how to articulate meaning in information-theoretic terms.


Subscribe to Brendan Graham Dempsey to keep reading this post and get 7 days of free access to the full post archives.